LLM, or Large Language Models, are those types of artificial intelligence programs, that use machine learning to implement NLP (natural, language processing) tasks. OWASP Top 10 for LLM has been introduced as a framework that fetches and ranks the most important and commonly found security vulnerabilities in applications that involve LLMs.

The initiative focuses on educating experts like developers, designers, organizations, and architects about the prevalent security risks by managing and deploying LLMs.

It discusses the OWASP LLM vulnerabilities, which are easily exploited and can significantly impact the systems that leverage these models. It offers ideal security guidance for designing, developing, and deploying applications that run using LLM technologies.

In this article, we will provide a quick rundown of all vulnerabilities under the OWASP top 10 LLM 2024, examples, and the preventive measures you need to adopt.

Table of Contents

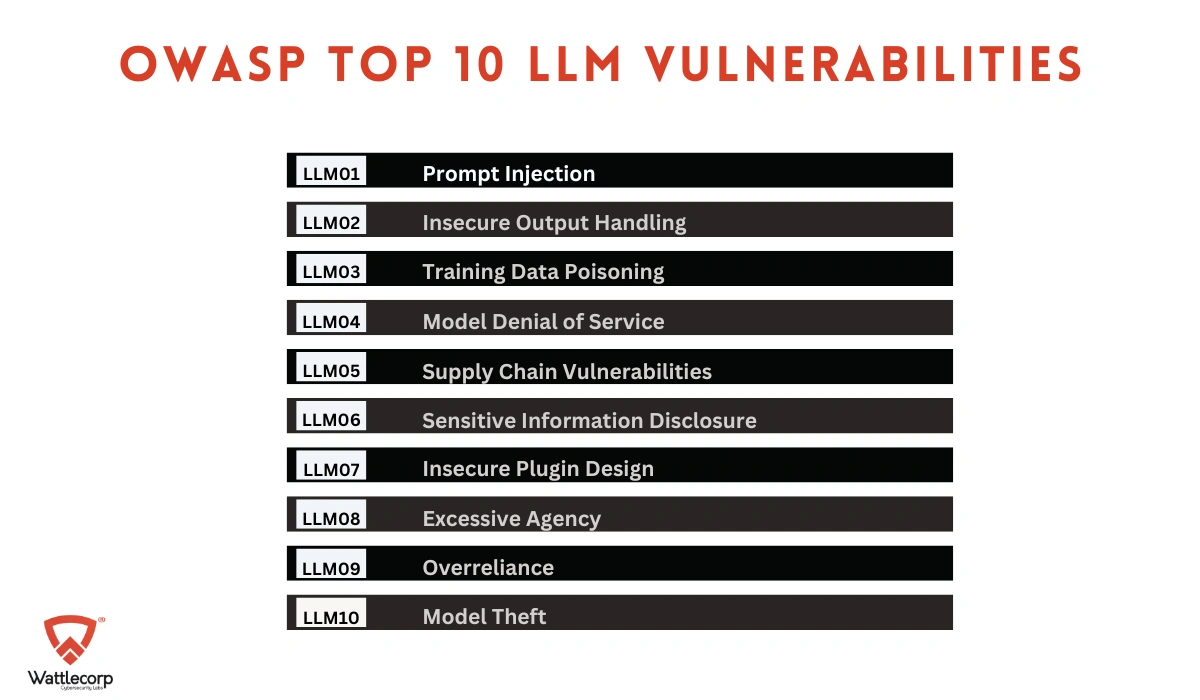

Toggle- OWASP Top 10 LLM Vulnerabilities

- LLM01: Prompt Injection

- LLM02: Insecure Output Handling

- LLM03: Training Data Poisoning

- LLM04: Model Denial of Service

- LLM05: Supply Chain Vulnerabilities

- LLM06: Sensitive Information Disclosure

- LLM07: Insecure Plugin Design

- LLM08: Excessive Agency

- LLM09: Over-reliance

- LLM10: Model Theft

- Why Is the OWASP Top 10 for LLM Important?

- Frequently Asked Questions

OWASP Top 10 LLM Vulnerabilities

Let’s get into the OWASP LLM top 10 vulnerabilities in detail:

LLM01: Prompt Injection

When an attacker manipulates LLM using crafted inputs, this leads to a situation where LLM unknowingly follows the intentions of the attacker. It can cause social engineering, data exfiltration, unauthorized access, etc. for instance, the user can lead LLM to ignore the system, prompt and disclose sensitive data, or conduct unintended actions.

Example: Uploading a malicious resume that contains idle prompt injections causes LLM to falsely assess the candidate and find it favorable.

Prevention: It is important to enforce privilege control on back-in systems, which separates the external content and user prompts. You can also include human supervision for critical operations. Maintain trust boundaries between the external sources and LLM to eliminate risks.

LLM02: Insecure Output Handling

This could be due to inadequate validation, LLM output handling, and sanitization before they are moved downstream to the other systems. It can cause issues like XSS (Cross-Site Scripting), RCE (Remote Code Execution), and CSRF (Cross-Site Request Forgery).

Example: A user who leverages an LLM-driven summarizer that accidentally includes sensitive information in the output that transmits to the attackers’ server due to the lack of appropriate validation.

Prevention: To eliminate insecure output handling, consider LLM as a user through the adoption of a zero-trust approach. Implement input validation to the responses that reach from the model to the back and functions. Make sure the input validation and sanitization are effective through OWASP Application Security Verification Standards. (ASVS). Also, Encore the model output before it is transmitted back to the users to avoid any undesirable code execution risks.

Also Read: OWASP TOP 10 Vulnerabilities

LLM03: Training Data Poisoning

It arises when attackers manipulate the LLM training data to cause biases or vulnerabilities that can compromise the model’s effectiveness and overall security. It results in undesirable outputs and degrades the performance, thereby affecting the operators’ reputation.

Example: When an LLM is fed with data from unauthorized sources, it can contain harmful or unsafe information that is reflected in the model’s responses.

Prevention: To avoid training data poisoning involved in supply chain verification for the training data and use Machine Learning Bill Of Materials (ML-BOM) technology to manage attestations. Conduct input filters or stringent vetting for particular categories of data sources to manage the volume of undesirable data.

LLM04: Model Denial of Service

Denial of Service attacks include consuming a significant amount of resources which can degrade the service quality or cause unresponsiveness. Attacks could send a series of inputs beyond the capacity of the model, which causes it to use additional computational resources.

Example: A usual example is where the attacker floods the LLM with specifically crafted variable length inputs that fill the context window limits. It can exploit the inefficiencies in managing such inputs.

Prevention: Incorporate input validation and satiation that ensures that the user inputs fall within the limits and eliminates malicious content. Limit resource use per request and follow API rate limits to confine the requests a user can make at a particular time.

LLM05: Supply Chain Vulnerabilities

It defines the risks concerning the third-party components data or models within the LLM applications. It can cause biased outcomes, security breaches, and system failures. Conventional software components and pre-trained models fed by third parties can be affected which causes huge risks.

Example: Using a poisoned crowd, sourced information for training can cause biased or Inaccurate outputs. Using depreciated or outdated components can also be exploited by the intruder to perform malicious activities and gain unauthorized access.

Prevention: To eliminate supply chain vulnerabilities, thoughtfully, but suppliers and data sources. also, check for the terms and conditions and the privacy policies. Utilize relevant plug-ins and make sure they are tested in detail based on the application requirements. Adopt strict access, controls, and authentication measures to avoid unauthorized access to LLM training environments and model systems.

Also Read: OWASP IoT Top 10 Vulnerabilities

LLM06: Sensitive Information Disclosure

LLM applications can reveal sensitive data, confidential information, and proprietary algorithms in the output. This can lead to authorized access to sensitive information, privacy, breaches, or access to intellectual property, etc. as consumers of LLM applications need to be aware of how to interact with LLM securely and understand the risks of unintentionally feeding sensitive data in the input.

Example: Consider an LLM-driven healthcare application. When the LLM is accidentally trained on real patient information, the application could reveal sensitive patient data. Many alarms like charge, GPT leverage, users, and prompts for training purposes. Under such circumstances, sensitive data could be exposed if they feed sensitive information into the prompts.

Prevention: Implement data sanitization controls to eliminate sensitive data from training datasets. Adopting validation policies and controls where the security experts can avoid threats such as data poisoning and malicious prompts.

LLM07: Insecure Plugin Design

LLM plugins or extensions are called automatically by LLM models whenever a user interaction occurs. The integration platform directs them and the application need not have control over the entire execution. Plugins could execute free text inputs from the model without validation to manage context-size constraints. This enables the potential attacker to build malicious requests to the plugin, which results in undesired behaviors. Inadequate access controls cause data exfiltration, privilege escalation, remote code execution, etc.

Example: A plugin that can’t verify inputs or conduct actions without authentication is an example of this vulnerability. Whenever an attacker finds this concern in LLM, they can opt for malicious code execution.

Prevention: Give least privileged access, test and validate the plugins to understand the risks and vulnerabilities involved. Use API and OAuth2 keys to leverage plugins for authentication of authorized identities.

LLM08: Excessive Agency

Excessive agency arises when an LLM-driven system gets granted a lot of autonomy, where it can perform dangerous actions as a response to malicious inputs. This can affect a huge range of impacts across integrity and availability of data based on which the LLM-based app interacts.

Example: LLM is granted access to the email accounts of users and might transfer emails without the consent of the user when it get manipulated due to malicious input.

Prevention: With limited tools and plug-ins, the LLM agents use to call only the required functions. Make sure that the plug-in executes stringent parameter inputs and exercises minimized privilege access controls. Prevent open-ended functions, and implement monitoring and logging to identify and respond to unauthorized activities.

Also Read : OWASP API Security Top 10

LLM09: Over-reliance

Over-reliance risk happens when the systems or users go for LLM-generated output with no adequate validation or oversight. It can cause misinformation security, breaches, and similar risks. LLMs can cause unsafe or inaccurate content, which can mislead the users, otherwise known as hallucination.

Example: LLM can produce incorrect code suggestions that bring vulnerabilities in the software system, if not tracked properly. LLM can also provide accurate and authoritative-sounding information, which leads to ineffective decision-making.

Prevention: To avoid overreliance, monitor and review LLM output regularly using self-consistency techniques to take out inconsistent text. Ensure the LLM outputs and evaluate them against trusted external sources to execute automatic validation techniques that verify generated content. Adopt secure coding practices to eliminate the potential vulnerabilities and their integration into LLMs.

Also Read : OWASP Top 10 Privacy Risks

LLM10: Model Theft

It is the authorized access of LLM models with the use of malicious actors. This occurs when the LLM models are physically, stolen, compromised, or copied, which helps the intruder create an equivalent functioning model. Model theft can cause brand reputation loss, economic concerns, damage to competitive advantage, and unauthorized access to sensitive data.

Example: An intruder identifies a threat in the business infrastructure to invade the LLM model repository. This could be due to the misconfiguration in the application, security settings, or the network.

Prevention: Adopting a holistic security framework that encompasses encryption access controls, LLM vulnerability assessment, and monitoring security is essential in eliminating the risks of LLM model theft. You can also make use of a centralized machine learning model inventory that helps to collect data regarding algorithms the models use for compliance, risk mitigation, and assessment.

Also Read: OWASP Mobile Top 10

Why Is the OWASP Top 10 for LLM Important?

As organizations increasingly integrate LLMs and advanced Generative AI technologies into business operations, the importance of appropriate security protocols has become increasingly relevant.

However, the speed of AI development has already outpaced the adoption of ideal security measures, which causes critical security gaps. OWASP’s Top 10 LLM list compiles the collective effort of more than 500 specialists and 150 contributors that belong to different sectors such as AI, security companies, cloud service providers, Independent Software Vendors (ISV), hardware manufacturers, etc.

Transparency in managing, using, and gaining access to the data is the need of the hour. Businesses should be aware of who gains access to sensitive data. The quantity of data access and the duration are of utmost importance, as is the amount of data the user sees. Taking a glance at the OWASP top 10 LLM vulnerabilities and adopting preventive measures can help in securing your LLM systems against potential threats.

From the OWASP Top 10 LLM checklist, we have gathered inferences that help to keep LLM applications highly secure. Sanitizing and validating inputs, monitoring the components with the software bill of materials and implementing the principles, such as zero trust, least, privilege, etc; and training the users or developers to ensure that your application security is at the paramount standards.

Securing your LLM development environment demands the attention of an established cybersecurity company with experts who can take care of your LLM applications. At Wattlecorp, we have a dedicated team to manage your LLM security and overall cybersecurity to maintain the best posture for your organization. To learn more, contact us today!

Frequently Asked Questions

1. What are the best practices for securing LLM development environments?

Deploying strict access controls, data encryption measures, and regular auditing to check suspicious activity, are essential to secure LLM development environments. Also, isolating development environments ensures the dependencies are updated, and utilizing secure coding methods can avoid all possible vulnerabilities.

2. What is a prompt injection in OWASP Top 10 LLM, and how can it be prevented?

Prompt injection refers to an attack in which the adversary is tuned to manipulate the input prompt of LLM to influence the output in undesired ways. This can usually cause security vulnerabilities. To prevent this, you can adopt great input validation, user input sanitization, and contextual awareness, confining the LLM’s access to major functions and data.

3. What are the privacy implications of using LLMs, and how can organizations ensure compliance with data protection regulations?

LLMs can cause privacy concerns as these could inadvertently store or process sensitive data. This leads to possible breaches of data protection regulations. By implementing strict data anonymization, making regular audits, reducing data retention, and following GDPR frameworks, organizations can ensure compliance.