Consider modern assistants like GPT (Generative Pre-trained Transformer) from Open AI and Google Gemini. Such tools have transformed how we interact with Artificial Intelligence (AI), helping applications implement translation, coding assistance, and the content creation process.

Large Language Model or LLM is a sophisticated Machine Learning model equipped to learn, generate, and interpret natural human language. Developed on neural networks based on the transformer architecture, these models learn from huge amounts of text data, which helps them produce text that highly mimics the patterns and styles of human writing.

So, what is LLM security? As LLMs get into the mainstream applications, they become an integral part of the sensitive applications, namely, healthcare, legal, and finance segments. LLM security vulnerabilities can cause privacy breaches, misinformation, information manipulation, etc; which causes serious LLM security risks to organizations and individuals.

With the advent and increased dependence on LLM in Cybersecurity, the exposure to cyber threats also increases. Cyber attackers can utilize LLM vulnerabilities to intrude into systems and perform attacks like model theft, data poisoning, unauthorized access, etc. Using robust security measures is necessary to secure the integrity of the models and the data under process.

Table of Contents

ToggleWhy is LLM in Cybersecurity Essential?

1. Data Breach

LLMs store and process huge quantities of data, making them the core targets for data breaches. Hackers can gain unauthorized access, modify the model inputs and outputs, or even compromise the integrity of the model and its data confidentiality. Effective security measures are necessary to prevent such situations and make sure the LLM applications are trustworthy. These data breaches in LLM implications travel beyond a mere data loss to include reputational and regulatory damage. Entities that use LLMs should take the best data protection strategies, continuous security audits, and incident response plans to eliminate these risks.

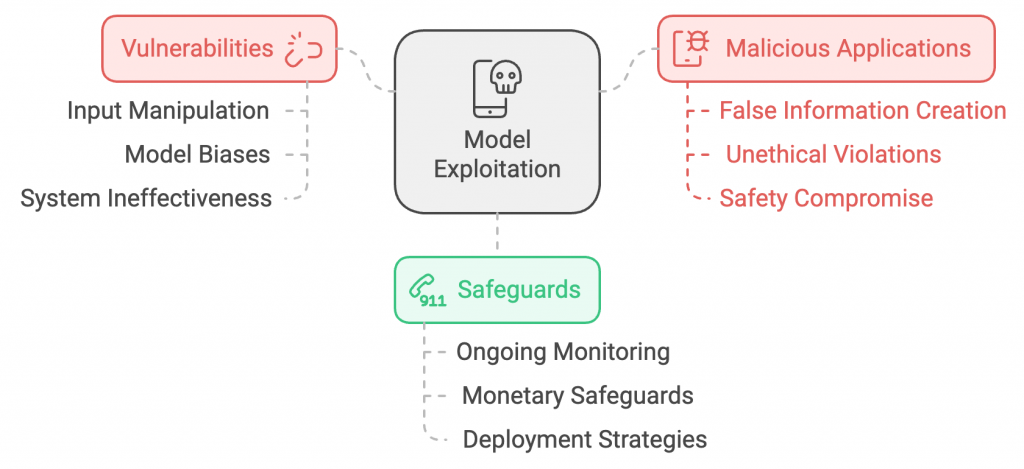

2. Model Exploitation

Model exploitation arises when the attackers find and utilize the vulnerabilities within the LLMs, especially for malicious applications. It can cause harmful results which compromise the safety and effectiveness of the system. Also, the attackers might create false information through the manipulation of inputs and the unethical violation of model biases. These types of vulnerabilities emphasize the need for ongoing model behavior, monetary, and deploying safeguards against such exploits.

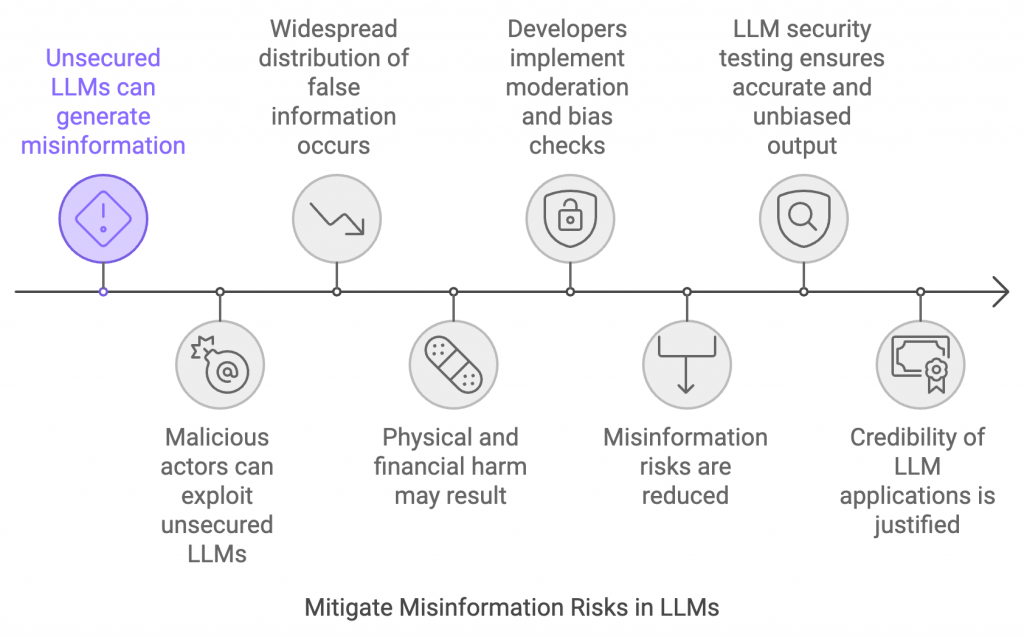

3. Misinformation

LLMs can generate and spread misinformation if not secured and monitored appropriately. If malicious actors utilize this opportunity, it can cause widespread distribution of false information. The models can also cause physical and financial harm in certain cases, for example when they provide wrong health or financial advice maliciously. Hence, developers need to implement moderation techniques and bias checks to reduce the risks of misinformation. Making sure that the output stays accurate and unbiased with LLM security testing justifies the credibility of LLM applications.

4. Ethical and Legal Concerns

LLM technology misuse can cause critical legal and ethical problems. For example, generating biased content can lead to legal concerns and a lack of organizational reputation. You need to integrate compliance checks and ethical practices during LLM deployment. Other legal risks involve adhering to international data protection standards like GDPR. Organizations should make sure that using LLMs follows all mandatory laws and keeps transparency with users concerning data handling practices.

Who holds the Responsibility for LLM Security?

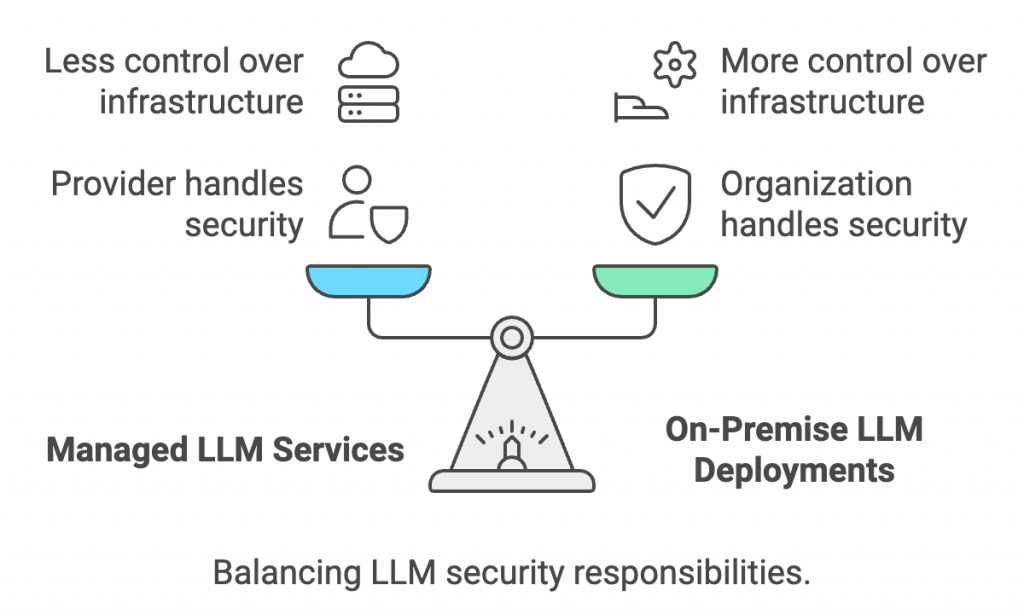

Many organizations and entities use LLMs through managed services or websites, such as Gemini and ChatGPT. Under such circumstances, the prime responsibility for the infrastructure and model security falls on the service provider.

But when organizations follow on-premise LLM deployment, for instance, using open source options such as LLaMA or on-premise, commercial options, these have extra security burdens. Here the organizations that operate and deploy the model opt for shared responsibility to safeguard the integrity and the entire infrastructure.

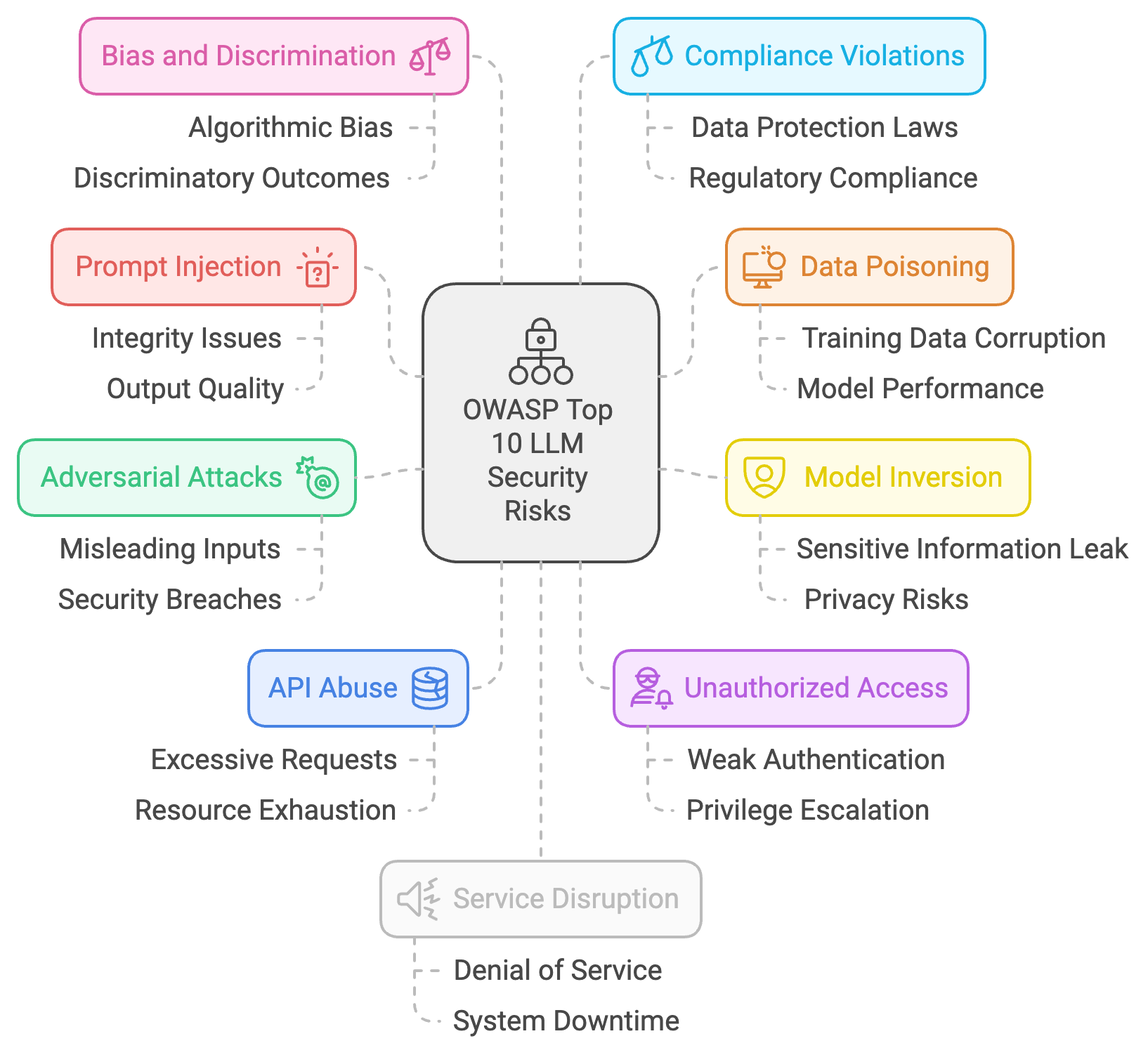

Listing Top 10 OWASP LLM Security Risks

Let’s briefly overview the OWASP Top 10 security risks for LLM.

- Prompt Injection: This security risk is where the attackers can manipulate the prompts fed to an LLM generating harmful responses. It affects the integrity and output quality of the model.

- Insecure Output Handling: this defines the insufficient balances and checks that cannot eliminate the spread of sensitive data. It can result in privacy breaches and the exposure of crucial data.

- Training Data Poisoning: The data used to train LLM gets tampered with and can corrupt the entire learning process for the model. it leads to skewing model outputs, which causes biased results.

- Model Denial of Service: it targets the LLM availability by weighing it with sophisticated and complex queries. It renders the model non-functional for authorized users.

- Supply Chain Vulnerabilities: It occurs when the services or components the model depends on are compromised. it causes system-spread vulnerabilities.

- Sensitive Information Disclosure: Such risks occur when LLMs reveal proprietary or personal data inadvertently in the results.

- Insecure Plugin Design: The lack of security in the LLM plugin design can cause vulnerabilities that degrade the entire system.

- Excessive Agency: It defines making autonomous decisions with no adequate human supervision. This can cause undesirable results.

- Overreliance: The LLM overreliance can cause dependency, in which the critical decision-making goes to the model without any appropriate verification.

- Model Theft: It involves having unauthorized access and copying the LLM configurations.

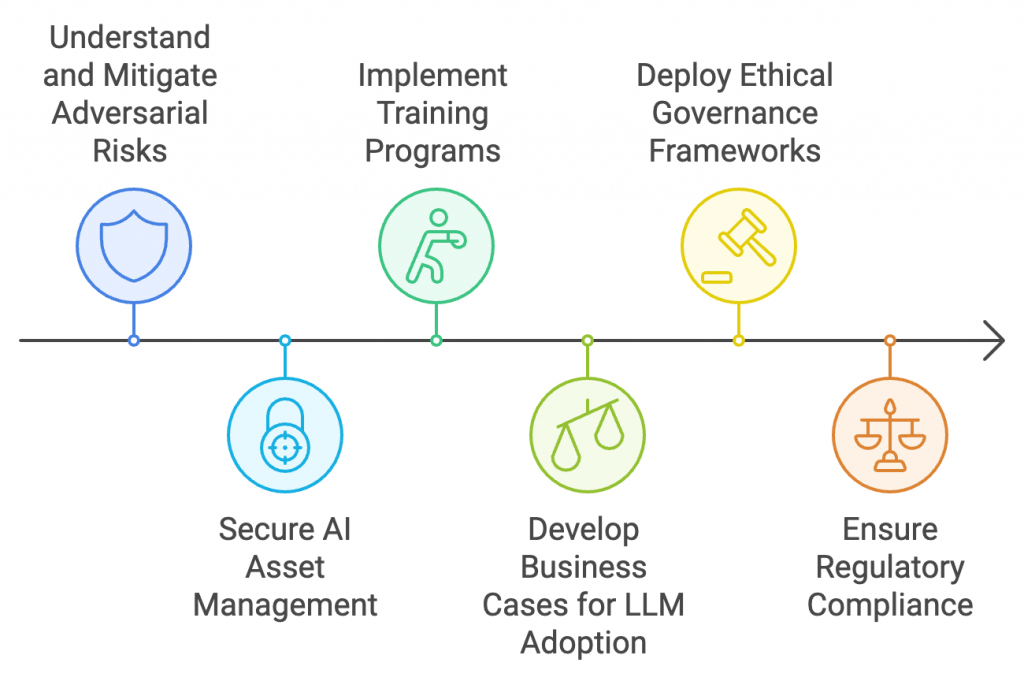

OWASP LLM AI Security & Governance Checklist

Adhering to the security and responsible usage of LLMs is mandatory to eliminate the emerging cybersecurity challenges.

LLM security OWASP and governance checklist provide a detailed list that advises defense mechanisms in LLM deployment. With due focus on guiding organizations along LLM in Cybersecurity complexities, it takes care of various factors like vulnerability and notification, adversarial risks, compliance mandates, and workforce training.

- Understanding adversarial risks and deploying adequate preventive measures.

- AI asset management to secure intellectual data and property.

- Implementing training programs for technical and non-technical technical workforce.

- Developing best business cases for LLM adoption.

- Deploying governance frameworks to follow ethical use.

- Adhering to regulatory compliance and legal obligations.

To quantify these factors, the following insights from the OWASP LLM Security & Governance checklist can help:

—Risk Management assessment and mitigation measures in case of possible threats to LLM integrity.

— AI asset management protection of algorithms and data governance. Employee training data to improve LLM security skills throughout the organizations.

— Educational workshops and online cybersecurity courses.

—Compliance management systems and Regulatory compliance to ensure LLM applications go well with the LLM security standards.

— Data protection regulations and industry-wide compliance checklists.

Ensuring LLM Security: Best Practices to Follow

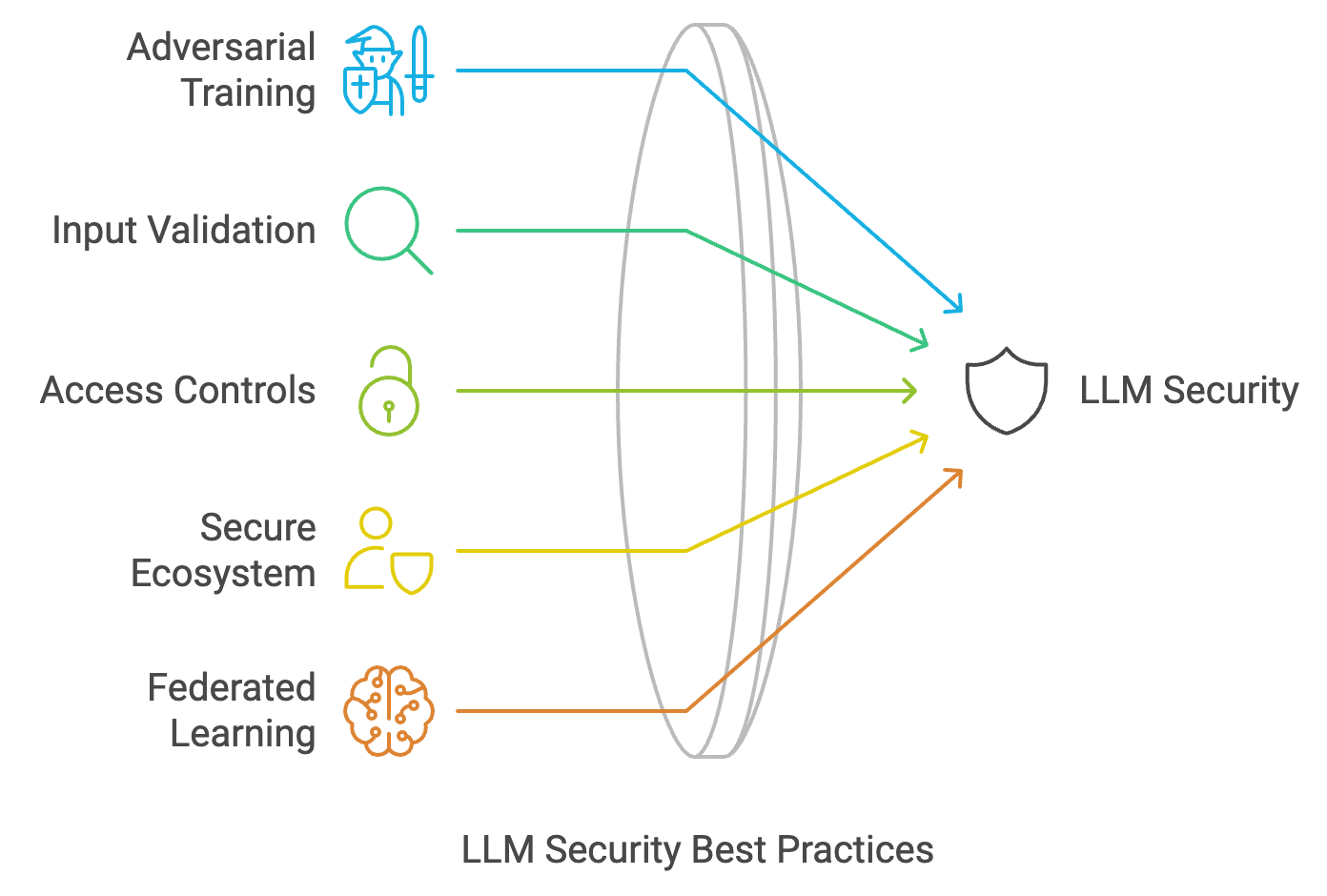

Here are certain measures you can adopt to ensure LLM security best practices:

Adversarial Training:

It involves the exposure of LLM to the Adversarial consequences right in the training phase. It enhances the resilience of LLM against potential attacks, and the model learns to respond to the manipulation which improves its security.

Integration of adversarial training with LLM deployment helps organizations generate highly secure AI systems that can withstand complex cyber threats.

Input Validation Mechanisms:

With input validation mechanisms, you can prevent inappropriate inputs or malicious LLM security threats from influencing LLM operations. These checks make sure that only appropriate data is processed and safeguard the model against input-based LLM attacks like prompt injection.

Adopting in-depth input validation helps to safeguard the functionality and security of LLM against any intrusion attempts that could result in misinformation or unauthorized access.

Adopting Access Controls:

Access controls confine LLM interactions to authorized applications or users thus safeguarding against data breaches or authorized access. It can include authorization, authentication, auditing mechanism, etc; which makes sure that the model is accessed with close control and monitoring techniques.

Following stringent access controls can help your organization eliminate the risk associated with or authorized access to LLMs, securing specific data and intellectual property.

Secure Execution Ecosystem

With secure execution and environments, LLM can isolate itself from dangerous external influences. Methods like containerization help to enhance security by limiting access to the run-time environment of models.

Developing a secure execution ecosystem for LLMs is integral in safeguarding the authenticity of AI processes. It also helps to avoid the exploitation of vulnerabilities within the operational infrastructure.

Implementing Federated Learning

With Federated Learning, LLMs can be trained across different servers or devices without data centralization, thereby minimizing privacy risks, and data exposure. With this collaborative approach, you can distribute the learning process and improve model security.

Deploying federated learning strategies helps to improve security and takes user privacy into account, making it helpful to develop secure LLM applications.

Adopting Differential Privacy Mechanisms

Differential privacy allows a randomness to the model output and data which eliminates identification of separate data points within the collection of data sets. It safeguards the user’s privacy while letting the model learn from the large data insights.

By adopting a differential privacy mechanism, you can ensure that the sensitive data is highly confidential, data security is enhanced, and users’ trust in AI systems is fostered.

Following Bias Mitigation Techniques

Bias mitigation techniques helped to manage and eliminate the existing LLM biases, which enabled fair outcomes. The approaches include rebalancing training, datasets, algorithmic adjustments, and frequent monitoring of output for bias. By actively evaluating, developers can improve the legal, ethical, and social responsibility of LLM applications.

Taking LLM Security Ahead with Wattlecorp

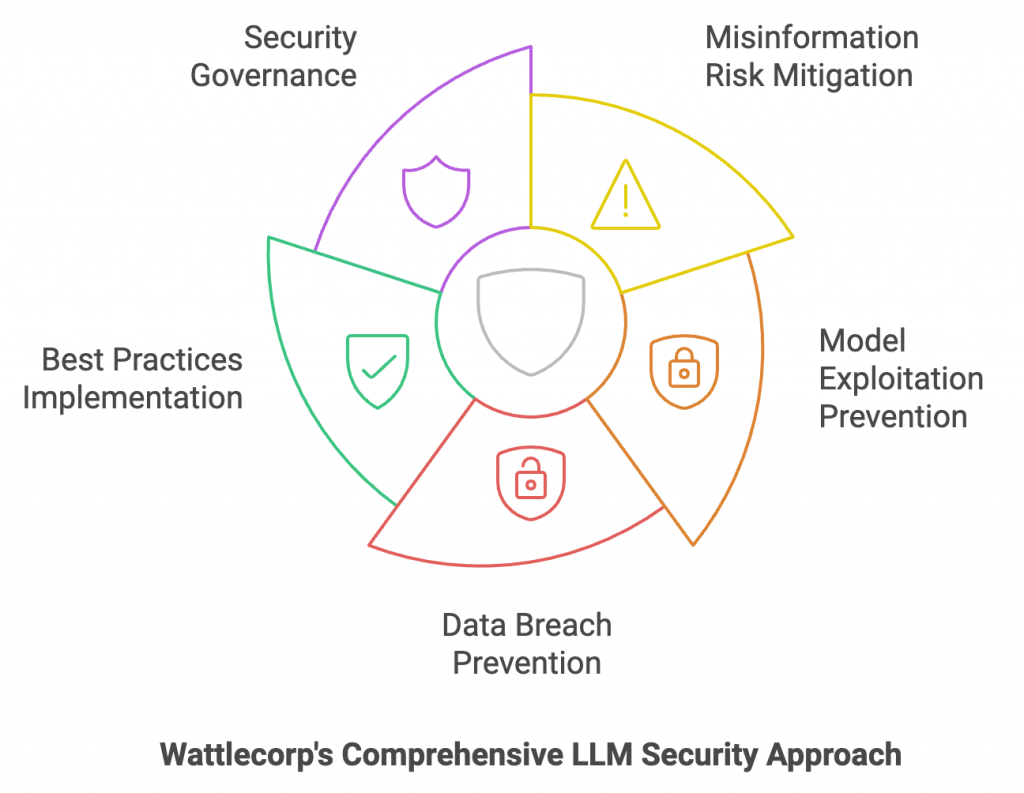

Wattlecorp stands at the forefront of LLM security companies, offering services for the best LLM security measures and enterprise-grade LLM AI security practices. With due recognition of the extreme importance of LLM for cybersecurity, Wattlecorp adopts a secure approach to combat misinformation risks, prevent model exploitation, fight data breaches, etc. By incorporating LLM best practices and giving a robust outlook to the organization’s security governance, we ensure the successful application of LLMs to your business. To know more about how our cybersecurity services can let you take your LLM security ahead, let’s talk!

Frequently Asked Questions

1. Are LLMs more vulnerable to attacks than traditional software?

Yes. LLMs or Large Language Models are usually more susceptible to specific kinds of attacks than conventional software. LLMs can be prone to adversarial attacks, in which the model might cause harmful outputs. Also, they can accidentally expose sensitive training data due to model inversion attacks. Different from the traditional software, LLMs move out of the deterministic logic to create responses based on data patterns, making it difficult to predict. This mitigates all possible vulnerabilities.

2. What are the frequent vulnerabilities in LLM models?

The common vulnerabilities in LLM models include when subjected to generating harmful content due to the lack of adequate training data. It could also occur due to the exposure to adversarial attacks, in which the malicious inputs can influence the model output.

3. How to select an LLM security provider?

While selecting an LLM security provider, you need to consider their expertise in eliminating risks such as adversarial attacks, data leakage and bias in model output. Make sure they provide robust monitoring measures, compliance with the regulations, and custom security features.